Why Content Authenticity Helps Agents Even More Than Humans

The internet is awash in misinformation. For humans, this creates confusion. For AI agents, it creates broken workflows. Content authenticity is the key to reliable agent execution.

digital integrity | content authenticity | decentralized trust

If humans can't tell what's real anymore, how can we expect autonomous agents to?...

Centralized content verification doesn't work when everyone can run their own AI. The solution isn't better watermarks—it's building trust infrastructure that makes authenticity an emergent property of network effects.

As artificial intelligence agents become more capable and more autonomous, a foundational question is emerging in both technical and ethical terms:...

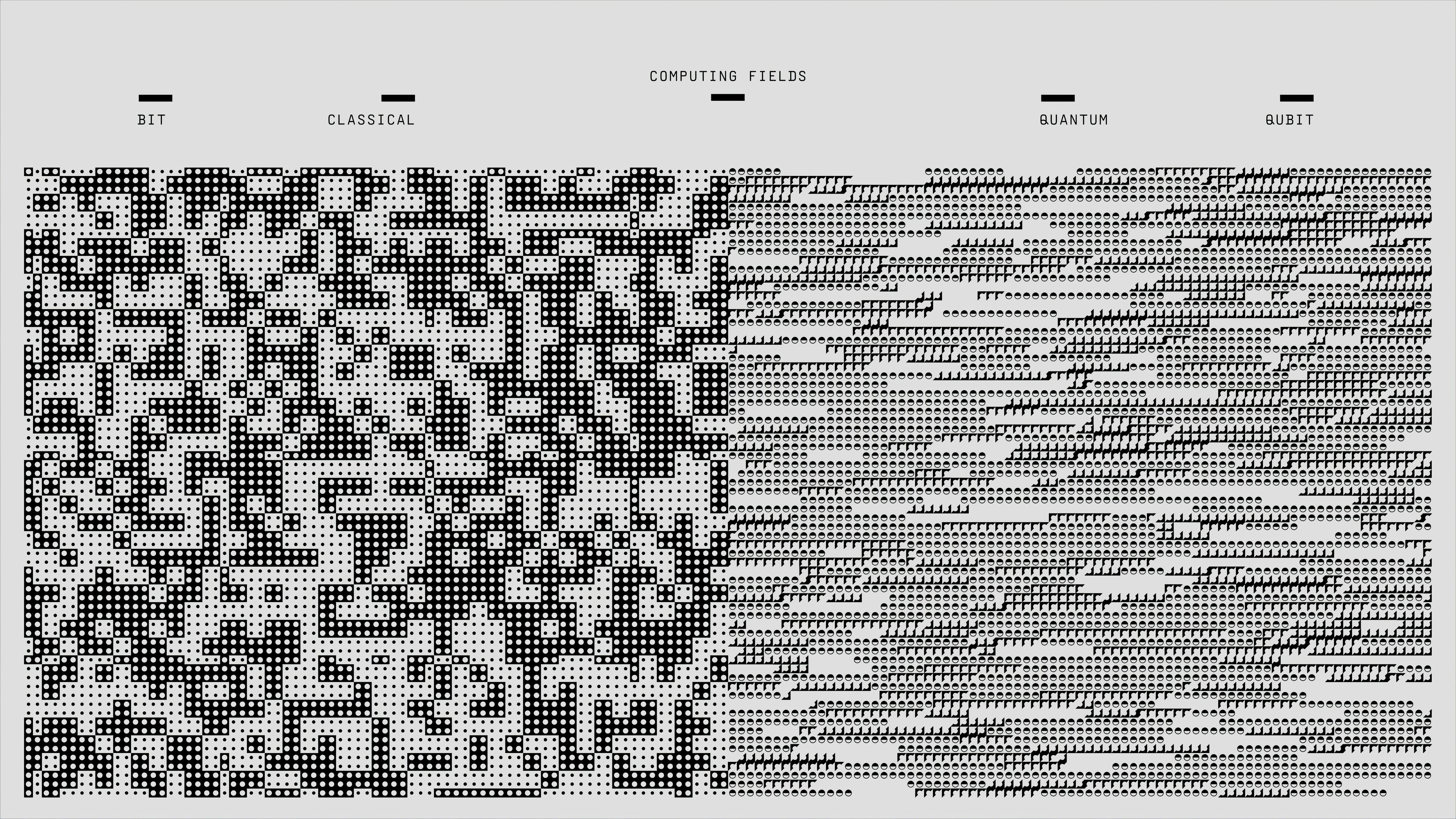

Exploring three fundamental approaches to digital trust: PKI hierarchies, PGP anarchy, and democratic trust anchors in the Noosphere ecosystem

Revisiting the intersection of AI-generated content, digital authenticity, and trust infrastructure in light of recent developments in AI and culture

Building a foundation for deterministic trust decisions with verifiable metadata standards and policy engines

Everyone agrees content authenticity matters, but unlike software supply chain security, it hasn't had its SolarWinds moment. Is it a vitamin, an aspirin, or perhaps a gold standard?

Anthropic just dropped Opus 4.1, and alongside it, the new Claude Code agents feature — and honestly, the whole thing feels like a case study in mixed signals....

How cryptographic proofs and verifiable trust are becoming essential infrastructure for publishers in the digital age

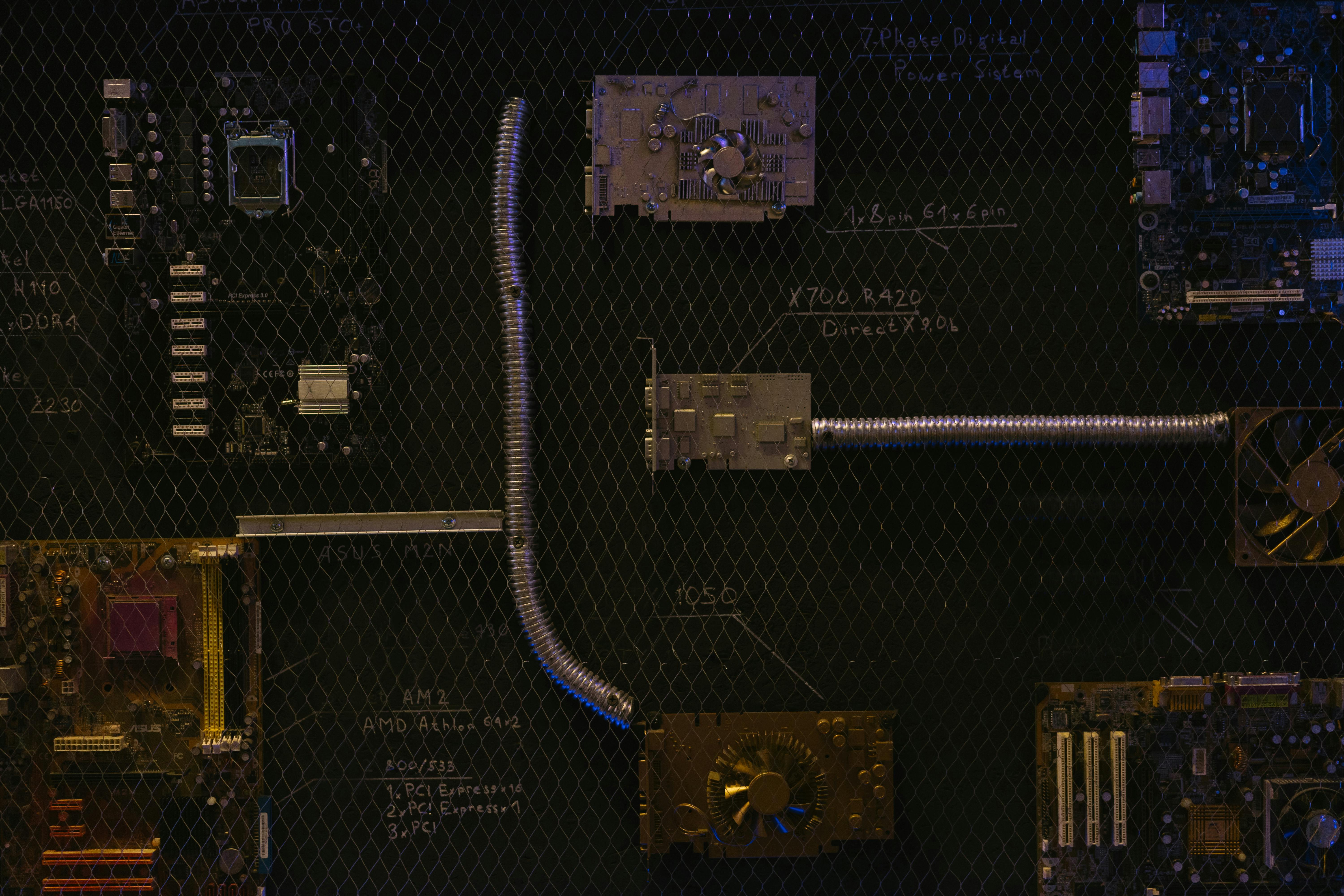

Understanding digital integrity as the foundation of trust in the digital world through cryptographic proofs and verifiable processes

From search visibility to verifiable trust, signed JSON‑LD turns your blog posts into portable, archival, and agent-ready content.

In today's conversations about AI agents, the prevailing paradigm is delegation. But this framing is misleading. Agents are autonomous, and we need to design trust accordingly.

How the Model Context Protocol is falling into the same complexity trap as SOAP, and why SSH is the simple solution we already have

As we continue to develop Noosphere's trust infrastructure, we've been thinking deeply about what it means to build truly decentralized systems. The web was originally designed to be decentralized, bu...

While much of the focus on media today is about mainstream versus social platforms, digital journalism is quietly undergoing a transformation. Local journalism, in particular, is showing remarkable re...

Trust infrastructure has always been arborescent—hierarchical, brittle, and prone to capture. What if we built it like a rhizome instead? Deleuze and Guattari's 'A Thousand Plateaus' offers a blueprint for truly decentralized trust.